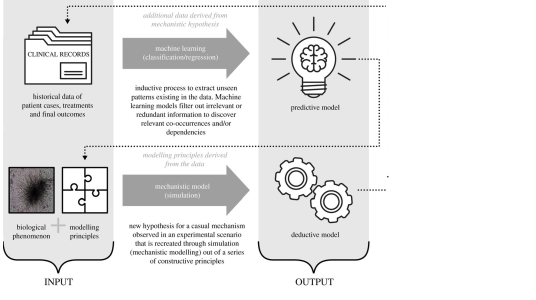

90% of the world’s data have been generated in the last five years. A small fraction of these data is collected with the aim of validating specific hypotheses. Such studies are driven by the development of mechanistic models that focus on the causality of input-output relationships. However, the vast majority of the data are collected to support statistical or correlation studies that bypass the need for causality and focus exclusively on prediction.

Along these lines, there has been a vast increase in the use of machine learning models, particularly in the biomedical and clinical sciences, in an effort to keep pace with the rate of data generation. Recent successes now raise the question of whether mechanistic models are still relevant in this area. Why should we try to understand the mechanisms of disease progression when we can use machine learning tools to predict disease outcome directly?

Oxford Mathematician Ruth Baker and Antoine Jerusalem from Oxford's Department of Engineering argue that the research community should embrace the complementary strengths of mechanistic modelling and machine learning approaches to provide, for example, the missing link between patient outcome prediction and the mechanistic understanding of disease progression. The full details of their discussion can be found in Biology Letters.