Oxford Mathematician Joni Teräväinen talks about his work concerning prime factorisations of consecutive integers and its applications in number theory.

"Analytic number theorists study the properties of the positive integers $1,2,3,\ldots$, and in particular the multiplicative structure of this set. From an additive point of view, the positive integers are rather simple: every integer is generated by $1$ (meaning that any positive integer can be written as a sum of ones). But what are the multiplicative generators of the integers? These are the prime numbers $2,3,5,7,11,\ldots$ (meaning that every integer greater than $1$ is a product of primes in a unique way, up to the order of the factors), and their distribution is a subtle and interesting question that has been at the centre of analytic number theory research for the past 150 years. The celebrated prime number theorem, proved in 1896, states that the number of primes up to a large number $x$, denoted by $\pi(x)$, is approximately $x/\log x$ (more precisely, the ratio of $\pi(x)$ and $x/\log x$ approaches $1$ as $x$ tends to infinity). But how good exactly is this approximation? It turns out that determining the optimal answer to this question is equivalent to solving the Riemann hypothesis, one of the great unsolved problems in modern mathematics.
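To get a feel for how good the approximation $\pi(x)\approx x/\log x$ is, one can count primes directly with the sieve of Eratosthenes and compare. The Python sketch below is purely illustrative: the function names `prime_sieve` and `prime_pi` and the sample values of $x$ are our own choices, not anything taken from the research described here.

```python
import math

def prime_sieve(limit):
    """Sieve of Eratosthenes: is_prime[n] is True exactly when n is prime (0 <= n <= limit)."""
    is_prime = [True] * (limit + 1)
    is_prime[0] = False
    if limit >= 1:
        is_prime[1] = False
    for p in range(2, math.isqrt(limit) + 1):
        if is_prime[p]:
            for multiple in range(p * p, limit + 1, p):  # cross off the multiples of p
                is_prime[multiple] = False
    return is_prime

def prime_pi(x):
    """pi(x): the number of primes p <= x (True counts as 1 in the sum)."""
    return sum(prime_sieve(x))

for x in (10**3, 10**4, 10**5, 10**6):
    count = prime_pi(x)
    ratio = count / (x / math.log(x))
    print(f"x = {x:>8}  pi(x) = {count:>6}  pi(x) / (x / log x) = {ratio:.4f}")
```

For reference, $\pi(10^3)=168$ and $\pi(10^6)=78\,498$. The printed ratio does decrease towards $1$, but only slowly (it is still about $1.08$ at $x=10^6$), which hints at why the finer question of the size of the error is so delicate.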

The distribution of prime numbers can be viewed as a purely multiplicative question. However, many of the most notorious conjectures in number theory arise when one combines additive structure with multiplicative structure. As an example, we have the unsolved twin prime conjecture: Are there infinitely many primes $p$ such that $p+2$ is also a prime? The list of such $p$ begins $3,5,11,17,29,41,\ldots$, and the largest known twin prime to date has $388\,342$ decimal digits. The twin prime problem combines addition (by looking at the shift $p+2$) with an inherently multiplicative structure, the primes. The resolution of the twin prime conjecture seems to be out of reach (despite spectacular progress by Zhang, Maynard, Tao and others on gaps between primes, showing that $p$ and $p+M$ are infinitely often simultaneously prime for some fixed $M\geq 2$), so one is led to look for related problems that are somewhat more manageable.
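The list of twin primes quoted above is easy to reproduce by machine. The following is a minimal sketch (the function `twin_prime_starts` is a hypothetical helper of ours) that sieves up to a bound and keeps those primes $p$ for which $p+2$ is also prime. Naturally, no finite search of this kind can say anything about whether the list goes on forever.

```python
import math

def twin_prime_starts(limit):
    """All p <= limit such that p and p + 2 are both prime."""
    size = limit + 3                      # room to test p + 2 for every p <= limit
    is_prime = [True] * size
    is_prime[0] = is_prime[1] = False
    for p in range(2, math.isqrt(size - 1) + 1):
        if is_prime[p]:
            for multiple in range(p * p, size, p):
                is_prime[multiple] = False
    return [p for p in range(2, limit + 1) if is_prime[p] and is_prime[p + 2]]

print(twin_prime_starts(100))  # [3, 5, 11, 17, 29, 41, 59, 71]
```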

The Liouville function $\lambda(n)$ is an important number-theoretic function given by the formula $\lambda(n)=(-1)^{\Omega(n)}$, where $\Omega(n)$ is the number of prime divisors of $n$ (counting multiplicities, so for example $12=2\cdot 2\cdot 3$ has $\Omega(12)=3$). The Liouville function is intimately related to the primes and to multiplicative properties of the integers, since one easily sees that $\lambda(p)=-1$ for all primes $p$ and $\lambda(mn)=\lambda(m)\lambda(n)$ for all $m,n\geq 1.$ For example, one can show that the prime number theorem stated above is equivalent to finding cancellation in the partial sums of $\lambda(n)$ (or more precisely, it is equivalent to $\lim_{x\to \infty}\frac{1}{x}\sum_{n\leq x}\lambda(n)=0$).
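The definition of $\lambda(n)$ translates directly into code. The sketch below is an illustration under our own assumptions, not anything used in the research: it computes $\Omega(n)$ by trial division (fine for small $n$, far too slow for serious computations), checks the example $\Omega(12)=3$, prints the first ten values of $\lambda$, and tracks the running sums $\sum_{n\leq x}\lambda(n)$ and averages $\frac{1}{x}\sum_{n\leq x}\lambda(n)$ next to $\sqrt{x}$. The helper names `big_omega` and `liouville` are our own.

```python
import math

def big_omega(n):
    """Omega(n): number of prime factors of n counted with multiplicity (Omega(1) = 0)."""
    count = 0
    d = 2
    while d * d <= n:
        while n % d == 0:   # strip out every copy of the factor d
            n //= d
            count += 1
        d += 1
    if n > 1:               # what is left over is a single prime factor
        count += 1
    return count

def liouville(n):
    """Liouville function lambda(n) = (-1)^Omega(n)."""
    return -1 if big_omega(n) % 2 == 1 else 1

print(big_omega(12), liouville(12))          # 3 -1, since 12 = 2 * 2 * 3
print([liouville(n) for n in range(1, 11)])  # [1, -1, -1, 1, -1, 1, -1, -1, 1, 1]

# Running sums of lambda(n); the prime number theorem says total / x -> 0.
total = 0
for x in range(1, 10**4 + 1):
    total += liouville(x)
    if x in (10, 100, 1000, 10**4):
        print(f"x = {x:>5}  sum = {total:>4}  sum / x = {total / x:+.4f}  sqrt(x) = {math.sqrt(x):.1f}")
```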

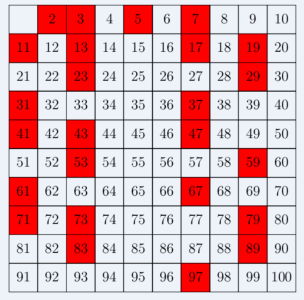

If one looks at the values of the Liouville function up to a large threshold (the sequence starts $+1,-1,-1,+1,-1,+1,-1,-1,+1,+1,\ldots$), it appears to behave just like a random sequence of signs, with no apparent structure in it. This is called the Möbius randomness principle in number theory (because it's often formulated for the Möbius function, a function closely related to the Liouville function $\lambda(n)$). One instance of this principle is that the partial sums of $\lambda(n)$ should behave like sums of independent random variables taking the values $\pm 1$ with equal probability (so there would be square-root cancellation in the partial sums $\sum_{n\leq x}\lambda(n)$). It has been shown that a rigorous proof of this is equivalent to the Riemann hypothesis, so it appears to be out of reach of current methods. A different but equally valid way to specify how the Liouville sequence appears random is to assert that the sequence $\lambda(n)$ forgets its history. This means that the value of $\lambda(n+1)$ is asymptotically independent of the value of $\lambda(n)$ (much in the same way as the $(n+1)$st result in a series of coin tosses is independent of the $n$th result). The same should hold for longer patterns of shifts, so if someone picks a large integer $n$ and tells you the values of $\lambda(n),\lambda(n+1),\ldots, \lambda(n+9)$, that should be of no use if you're trying to guess the value of $\lambda(n+10)$. A precise version of this statement was conjectured by Chowla in the 1960s, and it says the following.

Conjecture (Chowla's conjecture): Let $k\geq 1$. Then for any signs $s_1,\ldots, s_k\in \{-1,+1\}$, the asymptotic proportion of those integers $n\geq 1$ for which $\lambda(n+1)=s_1$, $\lambda(n+2)=s_2,\ldots, \lambda(n+k)=s_k$ is equal to $2^{-k}$.
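Chowla's conjecture can be probed numerically by tallying how often each sign pattern of length $k$ occurs. In the sketch below (the function names and the cut-off $N=10^5$ are our own illustrative choices), $\lambda(n)$ is tabulated with a sieve that adds one to $\Omega(m)$ for every prime power dividing $m$, and the observed proportion of each pattern is printed next to the predicted $2^{-k}$. Finite computations like this can only illustrate the statement, never prove it.

```python
from collections import Counter
from itertools import product

def liouville_table(limit):
    """lam[n] = lambda(n) for 1 <= n <= limit; lam[0] is an unused placeholder."""
    omega = [0] * (limit + 1)          # omega[m] accumulates Omega(m)
    for p in range(2, limit + 1):
        if omega[p] == 0:              # no smaller prime divides p, so p is prime
            pk = p
            while pk <= limit:         # each multiple of p^k gains one more factor p
                for m in range(pk, limit + 1, pk):
                    omega[m] += 1
                pk *= p
    return [0] + [-1 if omega[n] % 2 else 1 for n in range(1, limit + 1)]

def pattern_frequencies(lam, k, N):
    """Map each sign pattern s of length k to the proportion of 1 <= n <= N
    with (lambda(n+1), ..., lambda(n+k)) == s. Requires len(lam) > N + k."""
    counts = Counter(tuple(lam[n + 1:n + k + 1]) for n in range(1, N + 1))
    return {s: counts[s] / N for s in product((-1, 1), repeat=k)}

N, k = 10**5, 3
lam = liouville_table(N + k)
for signs, freq in pattern_frequencies(lam, k, N).items():
    print(f"{signs}: observed {freq:.4f}, Chowla predicts {2 ** -k}")
```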

Chowla's conjecture is closely related to the twin prime conjecture mentioned earlier, but at the same time Chowla's conjecture seems more tractable, due to the multiplicative property of $\lambda(n)$. Note that the twin prime conjecture would certainly imply as a special case that $\lambda(n)=\lambda(n+2)=-1$ infinitely often. Conversely, it is known that a sufficiently strong version of Chowla's conjecture could be used to deduce the twin prime conjecture (but no one has proved Chowla's conjecture, let alone this strengthening of it).

In 2015, Tao made the first significant progress on Chowla's conjecture, building on the work of Matomäki and Radziwiłł on multiplicative functions. He showed that the case $k=2$ of Chowla's conjecture holds (with the technical modification that he considered the logarithmic probability rather than the asymptotic probability). Thus, for example, $\lambda(n)=\lambda(n+1)=-1$ holds for exactly $25\%$ of all the integers (again with logarithmic probability). In 2017, in joint work with Tao, we showed that Chowla's conjecture holds for $k=3$ as well (and we showed that a different formulation of it, which states that the correlation sums $\sum_{n\leq x}\lambda(n)\lambda(n+1)\cdots \lambda(n+k-1)$ exhibit cancellation, holds for any odd value of $k$). Again, we had to use the logarithmic probability instead of the asymptotic one. In later work, we partially removed the restriction to logarithmic probability. Now we can, for example, say that $\lambda(n)=\lambda(n+1)=\lambda(n+2)=-1$ holds for exactly $12.5\%$ of all the integers.
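The difference between the two notions of probability is a change of weights: the asymptotic (natural) proportion counts each $n\leq x$ equally, while the logarithmic proportion gives $n$ the weight $1/n$ and normalises by $\log x$. The sketch below is our own illustration (function names hypothetical); it normalises by $\sum_{n\leq x}1/n=\log x+O(1)$ instead of $\log x$, which gives the same limit, and computes both proportions for the pattern $\lambda(n)=\lambda(n+1)=-1$. Tao's 2015 result concerns the logarithmic version, whose limit is $1/4$; the code simply prints the two empirical values side by side.

```python
def liouville_table(limit):
    """lam[n] = lambda(n) for 1 <= n <= limit; lam[0] is an unused placeholder."""
    omega = [0] * (limit + 1)          # omega[m] accumulates Omega(m)
    for p in range(2, limit + 1):
        if omega[p] == 0:              # no smaller prime divides p, so p is prime
            pk = p
            while pk <= limit:         # each multiple of p^k gains one more factor p
                for m in range(pk, limit + 1, pk):
                    omega[m] += 1
                pk *= p
    return [0] + [-1 if omega[n] % 2 else 1 for n in range(1, limit + 1)]

def natural_and_log_density(lam, N):
    """Proportion of 1 <= n <= N with lambda(n) = lambda(n+1) = -1, computed with
    equal weights (natural) and with weights 1/n (logarithmic). Requires len(lam) > N + 1."""
    hits = [n for n in range(1, N + 1) if lam[n] == -1 and lam[n + 1] == -1]
    natural = len(hits) / N
    harmonic = sum(1 / n for n in range(1, N + 1))  # = log N + O(1)
    logarithmic = sum(1 / n for n in hits) / harmonic
    return natural, logarithmic

N = 10**5
lam = liouville_table(N + 1)
natural, logarithmic = natural_and_log_density(lam, N)
print(f"natural: {natural:.4f}  logarithmic: {logarithmic:.4f}  (logarithmic limit is 0.25)")
```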

Chowla's conjecture turns out to be far from an isolated problem, and in the last few years methods that were originally developed to tackle partial cases of Chowla's conjecture, such as the ones above, have proved fruitful for several other problems as well, including some problems outside of number theory. For example, Tao used his work on the $k=2$ case of Chowla's conjecture (or rather a generalisation of it) to settle the Erdős discrepancy problem, a famous open problem in combinatorics. This notorious problem asks for a proof of the superficially simple statement that if you take any infinite sequence $s(n)$ of signs $\pm 1$, then the partial sums $\sum_{n=1}^{j}s(dn)$ can be made arbitrarily large in absolute value by choosing suitable values of $j$ and $d$. In a different interesting development, Frantzikinakis and Host used the previously mentioned work on Chowla's conjecture as an ingredient to make progress on Sarnak's conjecture, an important conjecture in ergodic theory that is also related to the randomness of the Liouville sequence. Clearly, much remains to be studied regarding Chowla's conjecture and its applications and generalisations."
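For a finite sequence, the quantity in the Erdős discrepancy problem can be computed by brute force: for each step $d$, walk along $d,2d,3d,\ldots$ and record the largest absolute partial sum seen. The function `discrepancy` below is a hypothetical helper written for illustration. Tao's theorem says that for every infinite $\pm 1$ sequence this maximum grows without bound as the length $N$ increases; a finite computation can only illustrate that.

```python
def discrepancy(s, N):
    """Largest |s(d) + s(2d) + ... + s(jd)| over all d, j >= 1 with j*d <= N.

    s is a list indexed so that s[n] is the n-th sign (+1 or -1) for 1 <= n <= N;
    s[0] is ignored.
    """
    best = 0
    for d in range(1, N + 1):
        partial = 0
        for multiple in range(d, N + 1, d):  # multiple = d, 2d, 3d, ... <= N
            partial += s[multiple]
            best = max(best, abs(partial))
    return best

N = 100
alternating = [0] + [(-1) ** (n + 1) for n in range(1, N + 1)]  # +1, -1, +1, -1, ...
print(discrepancy(alternating, N))  # 50, attained along d = 2, where every term is -1
```

The alternating sequence shows why the step $d$ matters: its ordinary partial sums never exceed $1$ in absolute value, yet along the even numbers every term is $-1$.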

For more on Joni's work with Terry Tao, see their papers on the structure of logarithmically averaged correlations of multiplicative functions, and on the structure of correlations of multiplicative functions at almost all scales.