Neutrino Observatory

The Oxford Mathematics e-newsletter for December is out. Produced each quarter, it's a sort of 'Now That's What I Call Maths,' pulling together our greatest hits of the last few months in one place.

It's for anyone who wants a flavour of what we do - research, online teaching, public lectures, having a laugh.

And it's COVID-lite. Click here.

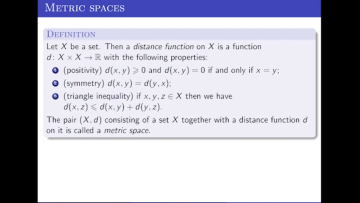

Over the past few weeks we have made 7 undergraduate lectures publicly available, sampling a range of topics from Geometry to Differential Equations. Today, and over the next 2 weeks, we're showing a full course on our YouTube Channel for the first time. Ben Green's 2nd Year 'Metric Spaces' (the first half of the Metric Spaces and Complex Analysis course) gets to grips with the concept of distance.

14:00

Dense for the price of sparse: Initialising deep nets with efficient sparse affine transforms

Abstract

That neural networks may be pruned to high sparsities and retain high accuracy is well established. Recent research efforts focus on pruning immediately after initialization so as to allow the computational savings afforded by sparsity to extend to the training process. In this work, we introduce a new 'DCT plus Sparse' layer architecture, which maintains information propagation and trainability even with as few as 0.01% of trainable kernel parameters remaining. We show that standard training of networks built with these layers, and pruned at initialization, achieves state-of-the-art accuracy for extreme sparsities on a variety of benchmark network architectures and datasets. Moreover, these results are achieved using only simple heuristics to determine the locations of the trainable parameters in the network, and thus without having to initially store or compute with the full, unpruned network, as is required by competing prune-at-initialization algorithms. Switching from standard sparse layers to DCT plus Sparse layers does not increase the storage footprint of a network and incurs only a small additional computational overhead.
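As a rough illustration of the idea, the sketch below assumes that a 'DCT plus Sparse' layer applies a fixed orthonormal DCT matrix plus a sparse trainable matrix to its input. The class name `DCTPlusSparse`, the uniformly random choice of trainable locations, and the zero initial values are all assumptions made for this example; the abstract does not specify the heuristics or initialisation actually used.

```python
import numpy as np


def dct_matrix(n):
    """Return the n x n orthonormal DCT-II matrix."""
    k = np.arange(n)[:, None]  # frequency index (rows)
    i = np.arange(n)[None, :]  # sample index (columns)
    D = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    D[0, :] /= np.sqrt(2.0)  # rescale the constant row so that D @ D.T = I
    return D


class DCTPlusSparse:
    """Hypothetical layer computing y = D x + S x (D fixed DCT, S sparse)."""

    def __init__(self, n, density, rng):
        # The dense matrix is stored here only for clarity; a fast DCT
        # routine would avoid materialising it.
        self.D = dct_matrix(n)
        num_trainable = max(1, int(round(density * n * n)))
        # Assumption: trainable locations are drawn uniformly at random.
        flat = rng.choice(n * n, size=num_trainable, replace=False)
        self.rows, self.cols = np.unravel_index(flat, (n, n))
        # Assumption: sparse values start at zero, so the layer is
        # exactly orthogonal at initialisation.
        self.values = np.zeros(num_trainable)

    def forward(self, x):
        y = self.D @ x
        # Add the sparse contribution S x one stored entry at a time.
        np.add.at(y, self.rows, self.values * x[self.cols])
        return y


rng = np.random.default_rng(0)
layer = DCTPlusSparse(n=8, density=0.05, rng=rng)
x = rng.standard_normal(8)
y = layer.forward(x)
```

Under these assumptions only `values`, `rows` and `cols` need to be stored per layer, which matches the storage cost of an ordinary sparse layer, and the orthogonal DCT term preserves the norm of the signal at initialisation however sparse the trainable part is.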

--

A link for this talk will be sent to our mailing list a day or two in advance. If you are not on the list and wish to be sent a link, please contact @email.

14:00

Preconditioners for computing multiple solutions in three-dimensional fluid topology optimisation

Abstract

Topology optimisation finds the optimal material distribution of a fluid or solid in a domain, subject to PDE, volume, and box constraints. The optimisation problem is normally nonconvex and can support multiple local minima. In recent work [1], the authors developed an algorithm for systematically discovering multiple minima of two-dimensional problems through a combination of barrier methods, active-set strategies, and deflation. The bottleneck of the algorithm is solving the Newton systems that arise. In this talk, we will present preconditioning methods for these linear systems as they occur in the topology optimisation of Stokes flow. The strategies involve a mix of block preconditioning and specialised multigrid relaxation schemes that reduce the computational work required and allow the application of the algorithm to three-dimensional problems.

[1] “Computing multiple solutions of topology optimization problems”, I. P. A. Papadopoulos, P. E. Farrell, T. M. Surowiec, 2020, https://arxiv.org/abs/2004.11797
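To give a feel for the deflation ingredient mentioned in the abstract, here is a toy scalar sketch. It is not the algorithm of [1], which works on PDE-constrained problems with barrier and active-set components. It assumes a shifted deflation factor of the form 1/|x - r|^p + shift for each known root r, and the function names `deflated_newton` and `find_roots` are hypothetical.

```python
def deflated_newton(f, df, x0, known_roots, power=2, shift=1.0,
                    tol=1e-10, max_iter=100):
    """Newton's method on G(x) = M(x) f(x), where M is the product of
    (1/|x - r|**power + shift) over the known roots r.
    Returns a root of f, or None if the iteration fails."""
    x = x0
    try:
        for _ in range(max_iter):
            fx = f(x)
            M = 1.0    # value of the deflation factor at x
            eta = 0.0  # derivative of log M at x
            for r in known_roots:
                d = x - r
                m = 1.0 / abs(d) ** power + shift
                dm = -power * d / abs(d) ** (power + 2)
                M *= m
                eta += dm / m
            # M >= shift**len(known_roots), so with shift = 1 a small
            # deflated residual implies a small original residual.
            if abs(M * fx) < tol:
                return x
            # Newton step for G: G / G' = f / (f' + eta * f).
            x = x - fx / (df(x) + eta * fx)
    except (ZeroDivisionError, OverflowError):
        return None  # treat numerical breakdown as a failed attempt
    return None


def find_roots(f, df, x0, max_roots):
    """Call deflated Newton repeatedly from the same initial guess."""
    roots = []
    while len(roots) < max_roots:
        r = deflated_newton(f, df, x0, roots)
        if r is None:
            break
        roots.append(r)
    return roots


# Toy problem: f(x) = x^3 - x has the roots -1, 0 and 1.
roots = find_roots(lambda x: x**3 - x, lambda x: 3 * x**2 - 1,
                   x0=0.6, max_roots=3)
```

Each deflated solve starts from the same initial guess, yet the factor M blows up near previously found roots, so the iteration is pushed towards a different one. In the setting of the talk each Newton step is a large linear system, which is where the preconditioners come in.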

A link for this talk will be sent to our mailing list a day or two in advance. If you are not on the list and wish to be sent a link, please contact @email.