Stability and minimality for a nonlocal variational problem

Abstract

We discuss the local minimality of certain configurations for a nonlocal isoperimetric problem used to model microphase separation in diblock copolymer melts. We show that critical configurations with positive second variation are local minimizers of the nonlocal area functional and, in fact, satisfy a quantitative isoperimetric inequality with respect to sets that are $L^1$-close. As an application, we address the global and local minimality of certain lamellar configurations.

On the Ramdas layer

Abstract

On calm, clear nights, a minimum in air temperature can occur just above the ground, at heights of order 0.5 m or less. This is contrary to the conventional belief that the ground is the point of minimum temperature. The feature is paradoxical because an apparently unstable layer (the region below the point of minimum) sustains itself for several hours. It was first reported from India by Ramdas and his coworkers in 1932, and was initially disbelieved and attributed to flawed thermometers. We trace its history and acceptance, and present a mathematical model in the form of a PDE that simulates this phenomenon.

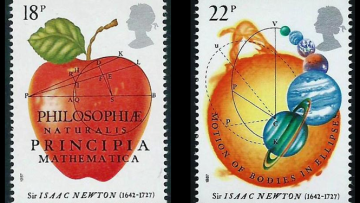

Robin Wilson's entire history of mathematics in one hour, as illustrated by around 300 postage stamps featuring mathematics and mathematicians from across the world.

From Euclid to Euler, from Pythagoras to Poincaré, and from Fibonacci to the Fields Medals, all are featured in attractive, charming and sometimes bizarre stamps.

Recent Advances in Optimization Methods for Machine Learning

Abstract

Optimization methods for large-scale machine learning must confront a number of challenges that are unique to this discipline. In addition to being scalable, parallelizable and capable of handling nonlinearity (even non-convexity), they must also be good learning algorithms. These challenges have spurred a great deal of research, which I will review, paying particular attention to variance reduction methods. I will propose a new algorithm of this kind and illustrate its performance on text and image classification problems.