
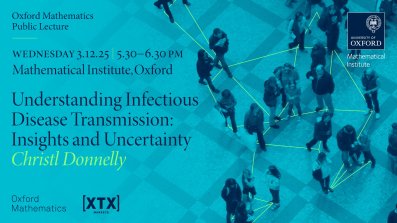

Understanding Infectious Disease Transmission: Insights and Uncertainty - Christl Donnelly

Abstract

How do diseases spread and how can the analysis of data help us stop them? Quantitative modelling and statistical analysis are essential tools for understanding transmission dynamics and informing evidence-based policies for both human and animal health.

In this lecture, Christl will draw lessons from past epidemics and endemic diseases, across livestock, wildlife, and human populations, to show how mathematical frameworks and statistical inference help unravel complex transmission systems. We’ll look at recent advances that integrate novel data sources, contact network analysis, and rigorous approaches to uncertainty, and discuss current challenges for quantitative epidemiology.

Finally, we’ll highlight opportunities for statisticians and mathematicians to collaborate with other scientists (including clinicians, immunologists, veterinarians) to strengthen strategies for disease control and prevention.

Christl Donnelly CBE is Professor of Applied Statistics, University of Oxford and Professor of Statistical Epidemiology, Imperial College London.

Please email @email to register to attend in person.

The lecture will be broadcast on the Oxford Mathematics YouTube Channel on Wednesday 17 December, 5-6 pm, and will remain available to watch any time afterwards (no need to register for the online version).

The Oxford Mathematics Public Lectures are generously supported by XTX Markets.


Wednesday 03 December 2025, 5.30-6.30 pm, Andrew Wiles Building, Mathematical Institute, Oxford

Did you know that you can access free introductory training on using ChatGPT Edu from in-house specialists?

A regular series of beginner-friendly, 90-minute sessions to help you get started with AI is available online and in person, on an ongoing basis. Over 1,200 staff and students across the University and Colleges have already taken this training, with 97% giving four- or five-star reviews.

In September 2024 we reported that a team of mathematicians from Oxford Mathematics and the Budapest University of Technology and Economics had uncovered a new class of shapes that tile space without using sharp corners. Remarkably, these ‘ideal soft shapes’ are found abundantly in nature – from sea shells to muscle cells.

Resonances as a computational tool

Abstract

Katharina Schratz will talk about 'Resonances as a computational tool'.

A large toolbox of numerical schemes for dispersive equations has been established, based on different discretization techniques such as discretizing the variation-of-constants formula (e.g., exponential integrators) or splitting the full equation into a series of simpler subproblems (e.g., splitting methods). In many situations these classical schemes allow a precise and efficient approximation. This changes drastically, however, whenever non-smooth phenomena enter the scene, as in problems with low regularity or high oscillations. Classical schemes then fail to capture the oscillatory nature of the solution, which may lead to severe instabilities and loss of convergence. In this talk, I present a new class of resonance-based schemes. The key idea in their construction is to embed the underlying nonlinear structure of resonances deeply into the numerical discretization. As in the continuous case, these terms are central to structure preservation and give the new schemes strong geometric properties at low regularity.