Oxford Mathematician Vinayak Abrol talks about his and his colleagues' work on using mathematical tools to provide insights into deep learning.

Why it Matters!

From the 4.5 billion photos uploaded to Facebook and Instagram per day and the 400 hours of video uploaded to YouTube per minute, to the 320 GB the Large Hadron Collider records per second, it is clear that we live in the age of big data. Indeed, last year the amount of data existing worldwide was estimated to have reached 2.5 quintillion bytes, and while about 30% of this data would be useful if analysed, only 1% actually is. Nevertheless, the boom in big data has brought success in many fields, such as weather forecasting, image recognition and language translation.

So how do we deal with this big data challenge? To fundamentally understand real-world data, we need to look at the intersection of mathematics, signal processing and machine learning, and combine tools from these areas. One such emerging field of study is 'deep learning', under the broader umbrella of 'artificial intelligence'. There have been remarkable advances in deep learning over the past decade, and it is now used in many day-to-day applications: on smartphones, for automatic face detection, recognition and tagging in photos, or for speech recognition in voice search and setting reminders; and in homes, for predictive analysis and resource planning using the Internet of Things and smart home sensors. This paradigm shift is driven by the fact that, in many tasks, machines are efficient, can work continuously and perform better than humans. However, deep neural networks have recently been found to be vulnerable. For instance, they can be fooled by carefully designed inputs, called adversarial examples, which is one of the major risks of applying deep neural networks in, say, safety-critical scenarios. In addition, most such systems are black boxes without any intuitive explanation and have little or no innate knowledge about human psychology, meaning that the huge buzz about automation through artificial intelligence is yet to be justified. Hence, understanding how these networks are vulnerable to attacks is attracting great attention, for example in biometric access to banking systems.
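To illustrate how such an attack can work, here is a minimal sketch of the fast gradient sign method (FGSM), one standard way of constructing adversarial examples. It is applied to a toy logistic-regression classifier rather than a deep network, and the parameters `w` and `b`, the budget `eps` and the random data are hypothetical choices for illustration. Each input coordinate moves by at most `eps`, yet because the small changes add up across 1000 dimensions, the score drops by exactly `eps * ||w||_1` and the predicted label flips.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, y, w, b, eps):
    """Fast gradient sign method for a logistic-regression classifier.

    x: input vector, y: label in {-1, +1}, (w, b): model parameters,
    eps: maximum per-coordinate (L-infinity) perturbation.
    """
    margin = y * (w @ x + b)
    # Gradient of the logistic loss log(1 + exp(-margin)) with respect to x.
    grad_x = -y * sigmoid(-margin) * w
    # Move every coordinate by eps in the direction that increases the loss.
    return x + eps * np.sign(grad_x)

rng = np.random.default_rng(0)
d = 1000
w = rng.standard_normal(d)      # hypothetical trained weights
x = rng.standard_normal(d)      # hypothetical clean input
b = 1.0 - w @ x                 # toy setup: the clean score w.x + b equals 1, so x is labelled +1
y = 1

x_adv = fgsm(x, y, w, b, eps=0.01)
print("clean score:", w @ x + b)
print("adversarial score:", w @ x_adv + b)   # equals 1 - 0.01 * ||w||_1, far below 0
print("largest change to any coordinate:", np.max(np.abs(x_adv - x)))
```

For a deep network the same recipe applies, with the closed-form gradient replaced by one computed through automatic differentiation.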

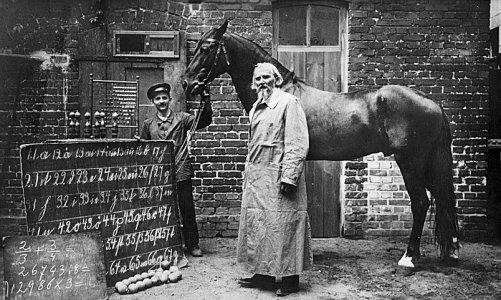

We try to approach this problem in a different way. We know that deep networks work well in many applications, but they cannot reason abstractly, generalise about the world or automate ordinary human activities. For instance, a robot that can pick up a bottle can pick up a cup only if it is retrained (not the case with humans). Hence, we need to understand the limits of such learning systems. All other questions are moot if the resulting system appears to solve the problem but in fact does not, a pitfall dubbed the 'Clever Hans effect', after a horse that seemed to do arithmetic but was actually responding to unintentional cues from the people questioning it. So, instead, we ask: 1) Does the system actually address the problem? 2) What is the system actually learning? 3) How can we make it address the actual problem?

General Methodology

In Oxford Mathematics, this work is funded under the Oxford-Emirates Data Science Initiative, headed by Prof. Peter Grindrod. I am supervised by Prof. Jared Tanner, and within the Data Science Research Group we are working to advance our understanding of deep learning approaches. Although many recent studies have attempted to explain and interpret deep networks, there is currently no unified, coherent framework for understanding how they work. Our work aims to study mathematically why deep architectures work well, what they learn, and whether they really address the problems they are built for. We aim to develop, using existing and well-understood mathematical tools, a theoretical understanding of deep architectures, which in general process data with a cascade of linear filters, non-linearities and contraction mappings. In particular, we focus on the underlying invariants learned by the network, such as multiscale contractions and hierarchical structures.

Our earliest research explored the key concept of sparsity, i.e., the low complexity of high-dimensional data when represented in a suitable domain. In particular, we are interested in learning representation systems (dictionaries) that provide compact (sparse) descriptions of high-dimensional data, from both a theoretical and an algorithmic point of view, and in applying these systems to data processing and analysis tasks. Another direction is to employ tools from random matrix theory (RMT), which allow us to compute an approximation of such learning systems under a set of simplifying assumptions. Our previous studies have revealed that the representations obtained at different intermediate stages of a deep network carry complementary information, and that sparsity plays a very important role in the overall learning objective.
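As a rough illustration of the 'cascade of linear filters, non-linearities and contraction mappings' view, the following sketch builds a toy two-stage 1-D cascade of convolution, ReLU and non-overlapping max pooling. It is an assumption made for illustration, not code from this work; the function names, filter length and pooling size are hypothetical. Because each filter `h` is normalised to unit L1 norm, the convolution is non-expansive (by Young's inequality), and ReLU and max pooling are both 1-Lipschitz, so the cascade as a whole cannot increase the distance between two inputs.

```python
import numpy as np

def stage(x, h, pool=2):
    """One stage of a toy cascade: linear filter -> ReLU -> max pooling."""
    z = np.convolve(x, h, mode="valid")          # linear filtering
    z = np.maximum(z, 0.0)                        # pointwise non-linearity (1-Lipschitz)
    n = (len(z) // pool) * pool                   # drop any ragged tail
    return z[:n].reshape(-1, pool).max(axis=1)    # non-overlapping max pooling (1-Lipschitz)

def cascade(x, filters):
    """Apply the stages one after another (a hypothetical two-layer 'network')."""
    for h in filters:
        x = stage(x, h)
    return x

rng = np.random.default_rng(1)
filters = []
for _ in range(2):
    h = rng.standard_normal(5)
    filters.append(h / np.sum(np.abs(h)))         # ||h||_1 = 1, so the filter is non-expansive

x1 = rng.standard_normal(64)
x2 = x1 + 0.1 * rng.standard_normal(64)           # a nearby input

ratio = np.linalg.norm(cascade(x1, filters) - cascade(x2, filters)) / np.linalg.norm(x1 - x2)
print("output distance / input distance:", ratio)  # cannot exceed 1 for this construction
```

Scaling a filter up so that its L1 norm exceeds 1 removes this guarantee, which is one way to see why the size of the linear layers matters for stability to small perturbations.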
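Random matrix theory typically enters through the spectra of large random matrices. As a minimal sketch of the simplest such simplifying assumption, i.i.d. Gaussian entries, the code below compares the eigenvalues of a sample Gram matrix `X.T @ X / n` with the support predicted by the Marchenko-Pastur law, `[(1 - sqrt(c))^2, (1 + sqrt(c))^2]` with `c = p / n`. The matrix sizes and seed are arbitrary choices for illustration, and this is not a model of any particular network from this work.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p = 2000, 500                   # n samples (rows), p features or units (columns)
c = p / n                          # aspect ratio, 0.25 here
X = rng.standard_normal((n, p))    # simplifying assumption: i.i.d. N(0, 1) entries
S = X.T @ X / n                    # p x p sample Gram (covariance) matrix
eig = np.linalg.eigvalsh(S)        # S is symmetric, so use the symmetric eigensolver

# Marchenko-Pastur prediction for the edges of the spectrum as n, p -> infinity with p/n = c.
lo, hi = (1 - np.sqrt(c)) ** 2, (1 + np.sqrt(c)) ** 2
print("empirical eigenvalue range:", eig.min(), eig.max())
print("Marchenko-Pastur support:  ", lo, hi)
print("mean eigenvalue:", eig.mean())   # the MP law has mean 1 for unit-variance entries
```

For these sizes the predicted support is [0.25, 2.25], and the empirical extremes should sit close to those edges.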
In fact, the so-called non-linearities and pooling operations, which seem complicated, can be explained via constrained matrix factorization problems together with hierarchical, sparsity-aware signal processing. As for the underlying building blocks of such systems, convolutional networks (popular for image applications) can be analysed via convolutional matrix factorization and wavelet analysis, and fully connected networks via discrete Wiener and Volterra series analysis. Overall, our main tools come from sparse approximation, RMT, geometric functional analysis, harmonic analysis and optimisation.
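One well-known way to make the link between non-linearities and sparsity concrete is non-negative sparse coding: given a dictionary `D`, find `a >= 0` minimising `0.5 * ||y - D a||^2 + lam * sum(a)`. This is a standard observation from the sparse-coding literature, used here for illustration and not necessarily the formulation used in this work. Solved with the iterative shrinkage-thresholding algorithm (ISTA), the proximal step is a shifted ReLU, so a single iteration started from zero is exactly a ReLU layer with weights `D^T` and bias `-lam`, up to a positive scale. The random dictionary `D`, the code `a_true` and the value of `lam` below are hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def objective(a, y, D, lam):
    """0.5 * ||y - D a||^2 + lam * sum(a), the non-negative sparse-coding cost."""
    return 0.5 * np.sum((y - D @ a) ** 2) + lam * np.sum(a)

def nonneg_ista(y, D, lam, n_iter=2000):
    """ISTA for min_a 0.5*||y - D a||^2 + lam*sum(a) subject to a >= 0.

    The proximal step for this penalty plus the constraint is relu(v - lam * t),
    i.e. a ReLU with bias -lam * t.
    """
    t = 1.0 / np.linalg.norm(D, 2) ** 2   # step 1/L, L = largest eigenvalue of D^T D
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        v = a - t * D.T @ (D @ a - y)      # gradient step on the data-fit term
        a = relu(v - lam * t)              # proximal (thresholding) step
    return a

rng = np.random.default_rng(3)
m, k = 64, 128
D = rng.standard_normal((m, k))
D /= np.linalg.norm(D, axis=0)            # hypothetical random dictionary with unit-norm atoms
a_true = np.zeros(k)
a_true[[5, 40, 99]] = [1.5, 2.0, 1.0]     # hypothetical 3-sparse, non-negative code
y = D @ a_true
lam = 0.05

# One ISTA step from a = 0 equals t * relu(D^T y - lam): a ReLU layer with weights D^T, bias -lam.
t = 1.0 / np.linalg.norm(D, 2) ** 2
first_step = relu(t * D.T @ y - lam * t)
relu_layer = t * relu(D.T @ y - lam)
print("first ISTA step matches scaled ReLU layer:", np.allclose(first_step, relu_layer))

a_hat = nonneg_ista(y, D, lam)
print("non-zeros in estimate:", np.count_nonzero(a_hat))
print("cost at estimate / at a_true:", objective(a_hat, y, D, lam), objective(a_true, y, D, lam))
```

Running several such steps in sequence, or replacing the fixed `D` with learned weights per step, is one way to read a stack of ReLU layers as an unrolled sparse-coding algorithm.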