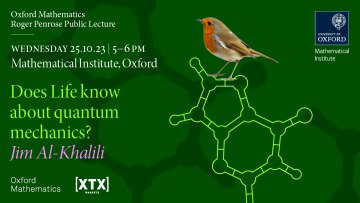

Physicists and chemists are used to dealing with quantum mechanics, but biologists have so far got away without having to worry about this strange yet powerful theory of the subatomic world. However, times are changing, as Jim Al-Khalili describes in this Oxford Mathematics Roger Penrose Public Lecture.

This seminar has been cancelled

Abstract

Data with an intrinsic network structure arise in many contexts, including social networks, biological systems (e.g., protein-protein interactions, neuronal networks), information networks (computer networks, wireless sensor networks) and economic networks. As ever larger amounts of graph data are generated, compressing such data for storage, transmission, or efficient processing has become a topic of interest.

In this talk, I will give an information-theoretic perspective on graph compression. The focus will be on compression limits and how they scale with the size of the graph. For lossless compression, the Shannon entropy gives the fundamental lower limit on the expected length of any compressed representation.

I will discuss the entropy of some common random graph models, with a particular emphasis on our results on the random geometric graph model.
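For the simplest random graph model the entropy has a closed form. A minimal sketch, assuming labelled graphs: in an Erdős–Rényi graph G(n, p) each of the C(n, 2) vertex pairs is an independent Bernoulli(p) edge, so the entropies add and H = C(n, 2) h(p) bits, where h is the binary entropy function. The stochastic block model helper conditions on known community labels. All function names are hypothetical, and neither formula covers the random geometric graph results mentioned above.

```python
from math import comb, log2


def binary_entropy(p):
    """Binary entropy h(p) in bits, with h(0) = h(1) = 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * log2(p) - (1 - p) * log2(1 - p)


def er_entropy_bits(n, p):
    """Entropy of a labelled Erdős–Rényi graph G(n, p).

    The C(n, 2) edge indicators are i.i.d. Bernoulli(p), so their entropies add.
    """
    return comb(n, 2) * binary_entropy(p)


def sbm_entropy_given_labels(labels, P):
    """Entropy of a labelled stochastic block model graph, conditioned on the
    community labels: pair (i, j) is an edge with probability P[labels[i]][labels[j]]."""
    n = len(labels)
    return sum(
        binary_entropy(P[labels[i]][labels[j]])
        for i in range(n)
        for j in range(i + 1, n)
    )


print(er_entropy_bits(10, 0.5))     # 45.0
print(er_entropy_bits(1000, 0.01))  # roughly 40,000 bits, versus 499,500 bits at p = 0.5
```

For p = 1/2 every labelled graph is equally likely, so the entropy is exactly C(n, 2) bits and, on average, no lossless code beats storing the adjacency bits directly; sparser graphs admit much shorter codes.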

Then, I will talk about the problem of compressing a graph with side information, i.e., when an additional correlated graph is available at the decoder. Turning to lossy compression, where one accepts a certain amount of distortion between the original and reconstructed graphs, I will present theoretical limits to lossy compression that we obtained for the Erdős–Rényi and stochastic block models by using rate-distortion theory.
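Both settings in this paragraph reduce to textbook formulas under simple, hypothetical assumptions, sketched below for labelled Erdős–Rényi graphs. For side information, it assumes the decoder's graph G2 is G1 with each edge indicator flipped independently with probability q, so the conditional entropy H(G1 | G2) is the relevant limit. For lossy compression, it applies the classical Bernoulli rate-distortion function R(D) = h(p) − h(D) pair by pair under average per-pair Hamming distortion D. This is standard information theory and not necessarily the speaker's formulation or results; the function names are illustrative.

```python
from math import comb, log2


def h(p):
    """Binary entropy in bits, with h(0) = h(1) = 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * log2(p) - (1 - p) * log2(1 - p)


def side_info_bits(n, p, q):
    """H(G1 | G2) for labelled G1 ~ G(n, p), where the decoder's graph G2 is G1
    with every pair's edge indicator flipped independently with probability q."""
    y = p * (1 - q) + (1 - p) * q  # P(pair is an edge in G2)
    # Per pair: H(X | Y) = H(X) + H(Y | X) - H(Y); sum over the C(n, 2) pairs.
    return comb(n, 2) * (h(p) + h(q) - h(y))


def rate_distortion_bits(n, p, D):
    """Rate-distortion limit in bits for labelled G(n, p) when the reconstruction
    may disagree on an average fraction D of vertex pairs."""
    if D >= min(p, 1 - p):
        # The constant empty (or complete) graph already achieves distortion min(p, 1 - p).
        return 0.0
    return comb(n, 2) * (h(p) - h(D))


print(side_info_bits(100, 0.1, 0.05))
print(rate_distortion_bits(100, 0.1, 0.02))
```

With q = 0 the decoder already holds G1 and nothing needs to be sent; with q = 1/2 the side information is independent of G1, so H(G1 | G2) equals H(G1).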

Fibring in manifolds and groups

Abstract

Algebraic fibring is the group-theoretic analogue of fibration over the circle for manifolds. Generalising the work of Agol on hyperbolic 3-manifolds, Kielak showed that many groups virtually fibre. In this talk we will discuss the geometry of groups which fibre, with some fun applications to Poincaré duality groups (groups whose homology and cohomology invariants satisfy a Poincaré–Lefschetz type duality, like those of manifolds), as well as to exotic subgroups of Gromov hyperbolic groups. No prior knowledge of these topics will be assumed.

Disclaimer: This talk will contain many manifolds.
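The definition behind "algebraic fibring" fits in a line. The standard formulation is recorded below as background, together with the manifold situation it abstracts; it is not material taken from the talk. For compact irreducible 3-manifolds the analogy is exact, since Stallings' fibration theorem supplies the converse (excluding a kernel of order 2).

```latex
% G algebraically fibres if it admits an epimorphism onto Z with finitely generated kernel:
\[
  1 \to K \to G \xrightarrow{\;\varphi\;} \mathbb{Z} \to 1,
  \qquad K = \ker\varphi \text{ finitely generated}.
\]
% G virtually fibres if some finite-index subgroup H \le G algebraically fibres.
% Manifold model: a fibre bundle F \to M \to S^1 with F compact and connected gives,
% from the long exact sequence of homotopy groups (\pi_2(S^1) = 0),
\[
  1 \to \pi_1(F) \to \pi_1(M) \to \pi_1(S^1) \cong \mathbb{Z} \to 1,
\]
% so \pi_1(M) algebraically fibres with kernel \pi_1(F), finitely generated since F is compact.
```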

These Famous Five are our 2023-24 cohort of Martingale Postgraduate Foundation Scholars, whom we are delighted to welcome to Oxford Mathematics. Rik Knowles, Joshua Procter, Charlotte Nash, Callum Marsh and Allan Perez are studying on our Mathematical Science MSc course and are the first cohort of Martingale Scholars to study with us.

Kaplansky's Zerodivisor Conjecture and embeddings into division rings

Abstract

Kaplansky's Zerodivisor Conjecture predicts that the group algebra kG is a domain, where k is a field and G is a torsion-free group. Although the general sentiment is that the conjecture is false, it remains wide open after more than 70 years. In this talk we will survey known positive results surrounding the Zerodivisor Conjecture, with a focus on the technique of embedding group algebras into division rings. We will also present some new results in this direction, obtained jointly with Pablo Sánchez Peralta.
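The torsion-free hypothesis is essential: if g has finite order n > 1, then (1 − g)(1 + g + ⋯ + g^(n−1)) = 1 − g^n = 0, a product of two nonzero elements. Conversely, a subring of a division ring has no zero divisors, which is why embedding kG into a division ring proves the conjecture for G. The sketch below checks the torsion counterexample numerically in Q[C_5], with elements stored as coefficient lists; the helper name is illustrative.

```python
from fractions import Fraction


def multiply_cyclic(a, b):
    """Multiply two elements of Q[C_n], the group algebra of the cyclic group
    of order n; a[i] is the coefficient of g**i."""
    n = len(a)
    c = [Fraction(0)] * n
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            c[(i + j) % n] += ai * bj  # g**i * g**j = g**((i + j) mod n)
    return c


n = 5
one_minus_g = [Fraction(1), Fraction(-1)] + [Fraction(0)] * (n - 2)  # 1 - g
norm_element = [Fraction(1)] * n  # 1 + g + ... + g**(n - 1)
result = multiply_cyclic(one_minus_g, norm_element)
print(all(coeff == 0 for coeff in result))  # True: two nonzero factors, zero product
```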

16:00

Maths meets Stats

Abstract

Speaker: Ximena Laura Fernandez

Title: Let it Be(tti): Topological Fingerprints for Audio Identification

Abstract: Ever wondered how music recognition apps like Shazam work, or why they sometimes fail? Can algebraic topology improve current audio identification algorithms? In this talk, I will discuss recent collaborative work with Spotify, in which we extract low-dimensional homological features from audio signals for efficient song identification despite continuous obfuscations. Compared with Shazam, our approach significantly improves accuracy and reliability in matching audio content under topological distortions, including pitch and tempo shifts.

Talk based on the work: https://arxiv.org/pdf/2309.03516.pdf
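The abstract does not spell out how the homological features are extracted, so the sketch below does not reproduce the method of the cited paper. It shows one generic building block instead: 0-dimensional persistent homology of the sublevel-set filtration of a 1D signal (for instance a hypothetical amplitude envelope). Each local minimum gives birth to a component, and when two components merge at a higher value, the younger one dies (the elder rule). The function name is hypothetical.

```python
import math


def sublevel_persistence_0d(signal):
    """(birth, death) pairs of 0-dimensional persistent homology for the
    sublevel-set filtration of a 1D signal, computed with union-find."""
    n = len(signal)
    parent = list(range(n))
    added = [False] * n
    pairs = []

    def find(i):
        # Root of i's component; a root is always its component's minimum sample.
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    # Sweep the threshold upwards: add samples in increasing order of value.
    for i in sorted(range(n), key=lambda k: signal[k]):
        added[i] = True
        for j in (i - 1, i + 1):
            if 0 <= j < n and added[j]:
                ri, rj = find(i), find(j)
                if ri == rj:
                    continue
                # Elder rule: the component with the higher minimum dies here.
                young, old = (ri, rj) if signal[ri] > signal[rj] else (rj, ri)
                if signal[young] < signal[i]:  # skip zero-persistence pairs
                    pairs.append((signal[young], signal[i]))
                parent[young] = old
    if n:
        pairs.append((min(signal), math.inf))  # the oldest component never dies
    return pairs


signal = [0, 2, 1, 3, 0.5]
stretched = [x for x in signal for _ in range(2)]  # each sample repeated twice
print(sorted(sublevel_persistence_0d(signal)))     # [(0, inf), (0.5, 3), (1, 2)]
print(sorted(sublevel_persistence_0d(stretched)))  # same pairs
```

Because the sublevel-set filtration only sees the order in which values occur along the signal, repeating every sample (a crude time stretch) leaves the nonzero persistence pairs unchanged. This gives one intuition for why topological features can tolerate tempo changes.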

Speaker: Brett Kolesnik

Title: Coxeter Tournaments

Abstract: We will present ongoing joint work with three Oxford PhD students: Matthew Buckland (Stats), Rivka Mitchell (Math/Stats) and Tomasz Przybyłowski (Math). We met last year as part of the course SC9 Probability on Graphs and Lattices. Connections with geometry (the permutahedron and generalizations), combinatorics (tournaments and signed graphs), statistics (paired comparisons and sampling) and probability (coupling and rapid mixing) will be discussed.
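The classical (type A) case behind these connections can be checked by brute force. A tournament on n players orients every pair, and its score vector (wins per player) is a sum of one endpoint from each segment [e_i, e_j]. The permutahedron with vertices the permutations of (0, 1, ..., n − 1) is exactly the Minkowski sum of these segments, and Landau's theorem says its integer points are precisely the score vectors. The sketch below, with hypothetical function names, verifies this for n = 4; it does not model the Coxeter generalisations discussed in the talk.

```python
from itertools import combinations, product
from math import comb


def score_vectors(n):
    """Score vectors (wins per player) of all 2**C(n, 2) tournaments on players 0..n-1."""
    pairs = list(combinations(range(n), 2))
    scores = set()
    for outcome in product((False, True), repeat=len(pairs)):
        s = [0] * n
        for (i, j), i_wins in zip(pairs, outcome):
            s[i if i_wins else j] += 1
        scores.add(tuple(s))
    return scores


def in_permutahedron(s):
    """Landau's conditions: the k smallest scores sum to at least C(k, 2), with
    equality for k = n; these cut out conv{permutations of (0, ..., n - 1)}."""
    t = sorted(s)
    n = len(t)
    return sum(t) == comb(n, 2) and all(sum(t[:k]) >= comb(k, 2) for k in range(1, n + 1))


n = 4
lattice_points = {v for v in product(range(n), repeat=n) if in_permutahedron(v)}
print(score_vectors(n) == lattice_points)  # True, as Landau's theorem predicts
print(len(score_vectors(3)))  # 7: six transitive tournaments plus the 3-cycle (1, 1, 1)
```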

Compactness and related properties for weighted composition operators on BMOA

Abstract

We improve a previously known function-theoretic characterisation of compactness for weighted composition operators on BMOA. Moreover, the same function-theoretic condition characterises weak compactness and complete continuity. To close the circle of implications, the operator-theoretic property of fixing a copy of c₀ proves useful.
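For readers new to this setting, the objects in the abstract have standard definitions, recorded below as background only; this is not the improved characterisation itself. Here u is an analytic weight on the unit disc, φ is an analytic self-map of the disc, and BMOA is normed via the Garsia-norm characterisation.

```latex
% Weighted composition operator: u analytic on the unit disc \mathbb{D}, \varphi : \mathbb{D} \to \mathbb{D} analytic.
\[
  (uC_\varphi f)(z) = u(z)\, f(\varphi(z)), \qquad z \in \mathbb{D}.
\]
% BMOA norm via the Garsia-norm characterisation, with \sigma_a(z) = (a - z)/(1 - \bar{a} z):
\[
  \|f\|_{\mathrm{BMOA}} = |f(0)| + \sup_{a \in \mathbb{D}} \bigl\| f \circ \sigma_a - f(a) \bigr\|_{H^2}.
\]
% General facts for a bounded operator T between Banach spaces:
\[
  T \text{ compact} \;\Longrightarrow\; T \text{ weakly compact}, \qquad
  T \text{ compact} \;\Longrightarrow\; T \text{ completely continuous},
\]
% and if T fixes a copy of c_0 (T restricted to some subspace isomorphic to c_0 is an
% isomorphism onto its image), then T is neither weakly compact nor completely continuous.
```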