I is for Inverse Problems

The Author

Prof Patrick Farrell

EPSRC Early Career Research Fellow, Applied Mathematics and Associate Professor of Numerical Analysis and Scientific Computing, Oriel College.

Patrick Farrell is a numerical analyst who works on the numerical solution of differential equations. One of his main areas of study is adjoint models.

Adjoints are essential to the solution of most inverse problems, as they allow for the computation of the derivative of the known outputs with respect to the unknown inputs. Adjoints also arise in many other problems, such as optimising the shapes of wings and quantifying the accuracy of nuclear simulations.

Find out more

Crime Fighting Maths is an article in Plus magazine by Chris Budd looking at the application of maths to crime scenes.

King's College London's 100 second science project has Roy Pike explaining Inverse Problems:

Albert Tarantola's book "Inverse Problem Theory" is available as a free pdf download.

Inverse Problems: Activities for Undergraduates has a range of approachable inverse problems.

I is for Inverse Problems

All mathematical models require information to make their predictions; to get something out, you have to put something in. To predict how an earthquake propagates through the ground, you have to know the material properties of the subsurface rocks. To predict the weather at noon, you have to give the initial conditions at dawn. To predict the drag coefficient of an aircraft, you have to specify its shape.

In many cases, however, we are faced with the opposite problem: given information about the outcome of a physical process, how did it come about? Such a problem is called an inverse problem, in contrast to the forward problems given above, for it inverts the relationship between cause and effect encoded in the underlying equations. Think of them as mathematical detective puzzles - we see the crime, and seek the culprit.

It's easy to pick a set of numbers that sum to 27. However, given the sum total 27, it's impossible to know the precise set of numbers without additional information.

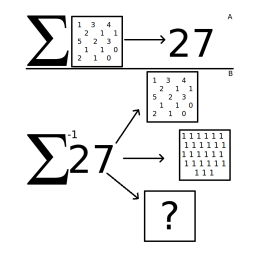

Here are some examples. Given a perturbation to a known electric field, where is the object that caused it, and what is its electrical impedance? This inverse problem of electrolocation is solved by certain species of fish to find food and mates in turbid waters.

Gnathonemus petersii uses electrolocation to find food and mates in murky water.

Given the spectrum of light emitted from a star, what is its chemical composition? Given surface observations of a seismic wave, what is the nature of the rock through which it passed? Inverse problems are solved today to monitor the integrity of nuclear reactors, and could one day be used to determine the chemical composition of distant exoplanets.

Inverse problems are fascinating and ubiquitous. Bats and submariners solve inverse problems to navigate in the dark. Their solution underpins much of modern technology, from subsurface petroleum exploration to medical imaging and the daily weather forecast. They offer a promising way of learning about things we can't easily do experiments on, such as the bottom of the ocean or the interior of the sun.

However, inverse problems are also very difficult mathematically. Forward problems arising in practice are usually well-posed, meaning that they have a sensible unique solution for sensible input data. Unfortunately, inverse problems are almost always ill-posed: there are typically many possible inputs that match the observed outputs. Even when a unique solution exists for perfect data, it is typically unstable to perturbations in the observed outputs; and real-life observations are always partial and corrupted by noise.

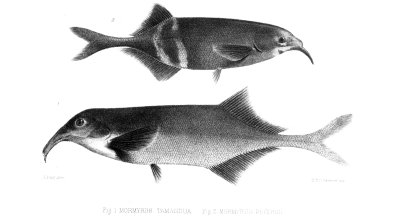

A famous example of the nonuniqueness of inverse problems is exhibited in a challenge posed by Marc Kac in 1966: can one hear the shape of a drum? In a mathematical idealisation, the sound a drum makes is described by the leading eigenvalues of the Laplacian on the domain bounded by a curve $\Gamma$. Given perfect knowledge of the eigenvalues of the Laplacian on an unknown domain, is it possible to reconstruct its shape $\Gamma$? In a landmark result, Carolyn Gordon et al. proved with a counterexample that the answer was no: two nontrivially distinct shapes give rise to exactly the same eigenvalues.

These two different drums produce the same harmonics.

There are two responses to this fundamental character of ill-posedness, the deterministic and Bayesian approaches. The deterministic response is that the problem should be regularised - perturbed to a nearby problem that is well-posed. For example, one might modify the problem to seek the input of minimal size, or the smoothest input, or the closest input to one's current guess, that matches the available observations. This is unsatisfying in several ways. Regularisation always involves an arbitrary and rather subjective choice; of all possible inputs consistent with the data, why should this one be blessed as the unique solution to the problem? Furthermore, changing the strength of the regularisation term added usually changes the character of the solution completely, and it is often not clear how it should be chosen in practice.

The alternative, the Bayesian approach, regards the solution to an inverse problem as a probability distribution over the input space, not a single input. This distribution attaches some probability to any input that matches the observational data to within experimental error. Digesting the observations is fundamentally a Bayesian process, where a prior probability distribution representing our current knowledge is updated to a posterior distribution in light of new information [see B is for Bayesian Inference]. This approach is more philosophically satisfying, but its computational demands are fearsome: characterising the posterior probability distribution in a typical problem might require the solution of hundreds of thousands or millions of forward problems. This cost renders the approach far beyond feasibility for most physical problems. The efficient Bayesian solution of inverse problems is at the forefront of current research in numerical analysis and statistics, with many recent advances and many important discoveries yet to be made.