Bayesian estimation of point processes

The Junior Applied Mathematics Seminar is intended for students and early career researchers.

Abstract

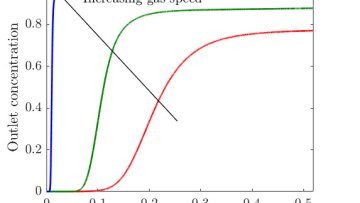

Multivariate point processes are used to model event-type data in a wide range of domains. One interesting application is to model the emission of electrical impulses by biological neurons. In this context, the point process model needs to capture the time dependencies and the interactions between neurons, which can be of two kinds: exciting or inhibiting. Estimating these interactions, and in particular the functional connectivity of the neurons, is a problem that has gained a lot of attention recently. The general nonlinear Hawkes process is a powerful model for events occurring at multiple interacting locations. Although there is an extensive literature on the analysis of the linear model, the probabilistic and statistical properties of the nonlinear model are still largely unknown. In this paper, we consider nonlinear Hawkes models and, in a Bayesian nonparametric inference framework, derive concentration rates for the posterior distribution. We also infer the graph of interactions between the dimensions of the process and prove that the posterior distribution is consistent on the graph adjacency matrix.
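For readers unfamiliar with the model, a common way to write a nonlinear Hawkes process is sketched here. The notation is an assumption made for illustration and is not taken from the abstract: $K$ is the number of neurons, $\nu_k$ a background rate, $h_{lk}$ the interaction function from neuron $l$ to neuron $k$, $\phi_k$ a nonnegative link function, and $\delta_{lk}$ an entry of the graph adjacency matrix.

```latex
% Conditional intensity of component k of a K-dimensional process N = (N^1, ..., N^K)
\lambda^k_t \;=\; \phi_k\!\left( \nu_k + \sum_{l=1}^{K} \int_{-\infty}^{t^-} h_{lk}(t-s)\, \mathrm{d}N^l_s \right),
\qquad k = 1, \dots, K.

% Interaction graph: an edge from l to k exists when h_{lk} is not identically zero
\delta_{lk} \;=\; \mathbf{1}\{\, h_{lk} \neq 0 \,\}.
```

In this formulation, positive values of $h_{lk}$ correspond to excitation and negative values to inhibition. A link function such as $\phi_k(x) = \max(x, 0)$ keeps the intensity nonnegative, and the linear model corresponds to $\phi_k(x) = x$ with $h_{lk} \geq 0$.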