14:00

Coordinate Deletion

Abstract

For a family $A$ in $\{0,\dots,k\}^n$, its deletion shadow is the set obtained by deleting one coordinate from any of its vectors. Given the size of $A$, how should we choose $A$ to minimise its deletion shadow? And what happens if we may instead delete only a coordinate that is zero? We discuss these problems and give an exact solution to the second.
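To make the two definitions concrete, here is a minimal Python sketch that computes both shadows for a small family in $\{0,1,2\}^3$. The function names `deletion_shadow` and `zero_deletion_shadow` and the example family are illustrative assumptions, not taken from the talk.

```python
def deletion_shadow(family):
    """All vectors obtained by deleting one coordinate from some vector of `family`."""
    return {v[:i] + v[i + 1:] for v in family for i in range(len(v))}


def zero_deletion_shadow(family):
    """Same as above, but only a coordinate equal to 0 may be deleted."""
    return {
        v[:i] + v[i + 1:]
        for v in family
        for i in range(len(v))
        if v[i] == 0
    }


# Hypothetical example family in {0, 1, 2}^3 (vectors stored as tuples).
A = {(0, 1, 2), (0, 0, 1), (2, 2, 2)}

full_shadow = deletion_shadow(A)
zero_shadow = zero_deletion_shadow(A)

print(sorted(full_shadow))  # [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2)]
print(sorted(zero_shadow))  # [(0, 1), (1, 2)]
```

The vector $(2,2,2)$ contributes nothing to the second shadow because it has no zero coordinate, which shows how differently the two minimisation problems can behave.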

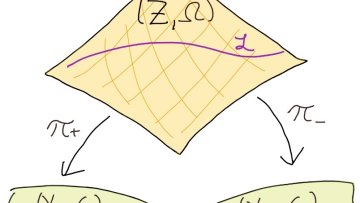

Oxford Mathematician Francis Bischoff talks about his recent work on generalized Kähler geometry and the problem of describing its underlying degrees of freedom.

Jacobian threefolds, Prym surfaces and 2-Selmer groups

Abstract

In 2013, Bhargava-Shankar proved that (in a suitable sense) the average rank of elliptic curves over $\mathbb{Q}$ is bounded above by 1.5, a landmark result which earned Bhargava the Fields Medal. Later Bhargava-Gross proved similar results for hyperelliptic curves, and Poonen-Stoll deduced that most hyperelliptic curves of genus $g>1$ have very few rational points. The goal of my talk is to explain how simple curve singularities and simple Lie algebras come into the picture, via a modified Grothendieck-Brieskorn correspondence.

Moreover, I’ll explain how this viewpoint leads to new results on the arithmetic of curves in families, specifically for certain families of non-hyperelliptic genus 3 curves.

11:30

Non-archimedean parametrizations and some bialgebraicity results

Abstract

We will give an overview of some recent work on non-archimedean parametrizations and their applications. We will start by presenting our work with Cluckers and Comte on the existence of good Yomdin-Gromov parametrizations in the non-archimedean context and a $p$-adic Pila-Wilkie theorem. We will then explain how this is used in our work with Chambert-Loir to prove bialgebraicity results in products of Mumford curves.

14:15

From calibrated geometry to holomorphic invariants

Abstract

Calibrated geometry, and more specifically Calabi-Yau geometry, occupies a modern, rather sophisticated crossroads between Riemannian, symplectic and complex geometry. We will show how, by stripping this theory down to its fundamental holomorphic backbone and applying ideas from classical complex analysis, one can generate a family of purely holomorphic invariants on any complex manifold. We will then show how to compute them, and describe various situations in which these invariants encode, in an intrinsic fashion, properties not only of the given manifold but also of moduli spaces.

Interest in these topics, if initially lacking, will arise spontaneously during this informal presentation.

Anna Seigal, one of Oxford Mathematics's Hooke Fellows and a Junior Research Fellow at The Queen's College, has been awarded the 2020 Society for Industrial and Applied Mathematics (SIAM) Richard C. DiPrima Prize. The prize recognises an early career researcher in applied mathematics and is based on their doctoral dissertation.

Compressed Sensing or common sense?

Abstract

We present a simple algorithm that successfully reconstructs a sine wave sampled vastly below the Nyquist rate, but with sampling time intervals that have small random perturbations. We show that the fact that it works is just common sense, and then go on to discuss how the procedure relates to Compressed Sensing. It is not exactly Compressed Sensing as traditionally stated, because the sampling transformation is not linear. Some published results do cover non-linear sampling transformations, but we would like a better understanding of the extent to which the relevant CS properties (of reconstruction up to probability) are known in certain relatively simple but non-linear cases that could be relevant to industrial applications.
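The "common sense" part can be illustrated with a small Python sketch. This is not the speaker's algorithm, which the abstract does not specify. It assumes the perturbed sampling times are recorded, and it simply tries each candidate frequency on a grid, fits a sinusoid by least squares, and keeps the frequency with the smallest residual. All constants (`TRUE_FREQ`, `MEAN_INTERVAL`, `JITTER`, the grid) are illustrative assumptions.

```python
import math
import random


def fit_residual(freq, times, values):
    """Least-squares fit of a*cos + b*sin at one frequency; returns the residual sum of squares."""
    w = 2.0 * math.pi * freq
    cc = cs = ss = cy = sy = yy = 0.0
    for t, y in zip(times, values):
        c, s = math.cos(w * t), math.sin(w * t)
        cc += c * c
        cs += c * s
        ss += s * s
        cy += c * y
        sy += s * y
        yy += y * y
    det = cc * ss - cs * cs
    if abs(det) < 1e-12:  # degenerate basis: no usable fit at this frequency
        return yy
    # Solve the 2x2 normal equations for the amplitudes a and b.
    a = (cy * ss - sy * cs) / det
    b = (sy * cc - cy * cs) / det
    return yy - (a * cy + b * sy)


# Assumed setup: a 37 Hz sine sampled at about 10 Hz (Nyquist would need more than 74 Hz),
# with each sampling instant perturbed by up to 0.02 s and the actual instants recorded.
rng = random.Random(0)
TRUE_FREQ = 37.0
MEAN_INTERVAL = 0.1
JITTER = 0.02

times = [n * MEAN_INTERVAL + rng.uniform(-JITTER, JITTER) for n in range(100)]
values = [math.sin(2.0 * math.pi * TRUE_FREQ * t + 0.3) for t in times]

# Candidate frequencies from 1.00 Hz to 60.00 Hz in steps of 0.02 Hz.
candidates = [1.0 + 0.02 * i for i in range(2951)]
estimate = min(candidates, key=lambda f: fit_residual(f, times, values))

print(f"estimated frequency: {estimate:.2f} Hz")
```

With perfectly uniform sampling, every alias of the true frequency would fit the samples equally well. The random perturbations give each alias a different phase error at each sample, so only the true frequency fits with a near-zero residual. Note that the unknown frequency enters the samples non-linearly, which is the departure from classical Compressed Sensing that the abstract mentions.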

Adaptive Gradient Descent without Descent

Abstract

We show that two rules are sufficient to automate gradient descent: 1) don't increase the stepsize too fast and 2) don't overstep the local curvature. There is no need for function values, line search, or any information about the function except its gradients. By following these rules, you get a method that adapts to the local geometry, with convergence guarantees depending only on smoothness in a neighborhood of a solution. Provided the problem is convex, our method converges even if the global smoothness constant is infinite. As an illustration, it can minimize an arbitrary twice continuously differentiable convex function. We examine its performance on a range of convex and nonconvex problems, including matrix factorization and training of ResNet-18.
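The two rules can be sketched in a few lines of Python. This is one possible realisation under stated assumptions, not a definitive implementation of the authors' method: the growth cap $\sqrt{1+\theta_{k-1}}\,\lambda_{k-1}$ with $\theta_k = \lambda_k/\lambda_{k-1}$, the factor $1/2$ in the curvature cap, the tiny initial step, and the test function are all choices made for this sketch and are not stated in the abstract.

```python
import math


def adaptive_gd(grad, x0, step0=1e-6, iterations=100):
    """Gradient descent whose stepsize is the smaller of two caps (sketch, see assumptions)."""
    x_prev = list(x0)
    g_prev = grad(x_prev)
    step_prev = step0
    theta = math.inf  # no growth restriction on the first adaptive step
    x = [xi - step_prev * gi for xi, gi in zip(x_prev, g_prev)]

    for _ in range(iterations):
        g = grad(x)
        dx = math.sqrt(sum((a - b) ** 2 for a, b in zip(x, x_prev)))
        dg = math.sqrt(sum((a - b) ** 2 for a, b in zip(g, g_prev)))
        if dg == 0.0:  # gradients stopped changing: nothing left to estimate
            break
        # Rule 1: don't increase the stepsize too fast.
        growth_cap = math.sqrt(1.0 + theta) * step_prev
        # Rule 2: don't overstep the local curvature, estimated from gradients only.
        curvature_cap = dx / (2.0 * dg)
        step = min(growth_cap, curvature_cap)
        theta = step / step_prev
        x_prev, g_prev, step_prev = x, g, step
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x


def grad_quadratic(x):
    """Gradient of the assumed test function f(x) = 0.5 * (x[0]**2 + 2 * x[1]**2)."""
    return [x[0], 2.0 * x[1]]


solution = adaptive_gd(grad_quadratic, [5.0, -3.0])
print(solution)
```

The loop never evaluates the function itself and performs no line search. The only inputs are gradients at successive iterates, from which the ratio `dx / dg` gives a local estimate of the inverse smoothness constant.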