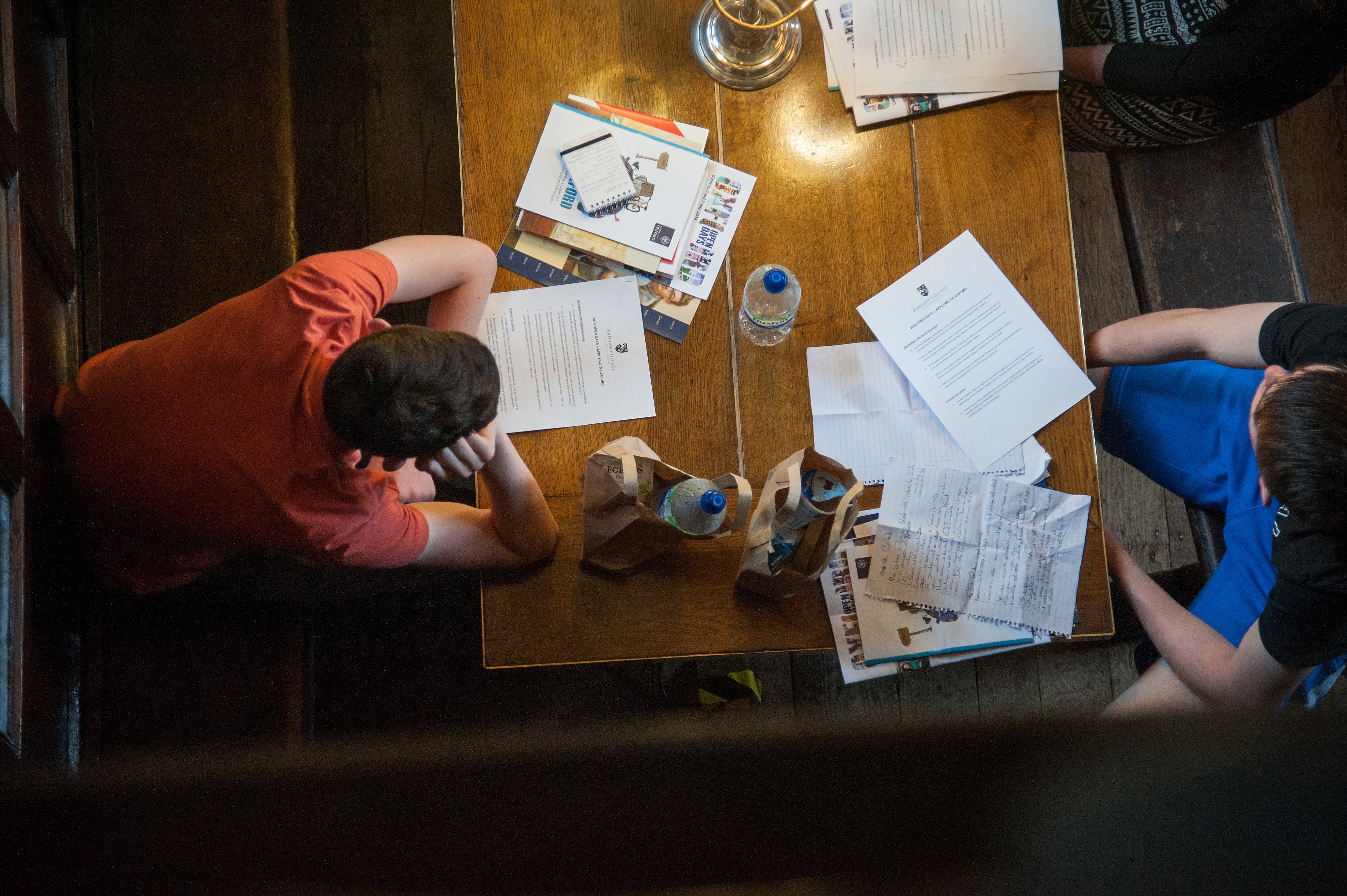

Fridays@2 continues in L1 this week with: Where does collaborating end and plagiarising begin? (https://www.maths.ox.ac.uk/node/70563)

For those of you (all?) who follow our social media, we have now joined Bluesky as 'oxfordmathematics', though we are not leaving X as we don't want to abandon nearly 70,000 followers.

We haven't posted on Bluesky yet, not least because much of our content is now video and Bluesky has a small video file size limit. But we will.

Warwick Mathematics Institute, University of Warwick

24-25 April 2025

Registration open until 28 March

14:00

Multilevel Monte Carlo Methods with Smoothing

Abstract

Parameters in mathematical models are often impossible to determine fully or accurately, and are hence subject to uncertainty. By modelling the input parameters as stochastic processes, it is possible to quantify the uncertainty in the model outputs.

In this talk, we employ the multilevel Monte Carlo (MLMC) method to compute expected values of quantities of interest related to partial differential equations with random coefficients. We use the circulant embedding method to sample from the coefficient and, to further improve the computational complexity of the MLMC estimator, we devise and implement a smoothing technique integrated into the circulant embedding method. This allows the coarsest mesh on the first level of MLMC to be chosen independently of the correlation length of the covariance function of the random field, leading to considerable savings in computational cost.
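For readers unfamiliar with MLMC, the telescoping idea behind the estimator mentioned in the abstract can be illustrated with a toy problem. The sketch below is not the speaker's method: it replaces the PDE with a simple integral, Q(ω) = ∫₀¹ exp(ωx) dx with ω drawn from a standard normal distribution, approximated by the trapezoid rule on 2^level intervals. It includes neither circulant embedding nor smoothing, and the function names and sample counts are hypothetical choices made for illustration.

```python
import math
import random


def trapezoid(omega, level):
    """Trapezoid-rule approximation of the integral of exp(omega * x) over [0, 1]
    using 2**level intervals (a stand-in for a PDE solve on mesh `level`)."""
    n = 2 ** level
    h = 1.0 / n
    interior = sum(math.exp(omega * i * h) for i in range(1, n))
    return h * (interior + 0.5 * (1.0 + math.exp(omega)))


def mlmc_estimate(samples_per_level, rng):
    """Toy MLMC estimator: E[Q_L] = E[Q_0] + sum over l of E[Q_l - Q_{l-1}].

    Each correction term uses the SAME random input on the fine and the
    coarse level, so its variance is small and few samples are needed.
    """
    estimate = 0.0
    for level, n_samples in enumerate(samples_per_level):
        total = 0.0
        for _ in range(n_samples):
            omega = rng.gauss(0.0, 1.0)  # one random input, shared by both levels
            fine = trapezoid(omega, level)
            coarse = trapezoid(omega, level - 1) if level > 0 else 0.0
            total += fine - coarse
        estimate += total / n_samples
    return estimate


# Illustrative sample counts: many cheap coarse samples, few expensive fine ones.
rng = random.Random(0)
estimate = mlmc_estimate([20000, 5000, 1250, 320, 80], rng)
print(f"MLMC estimate: {estimate:.3f}")
```

In this toy setting the exact mean is ∫₀¹ exp(x²/2) dx, roughly 1.195, so the printed estimate should land close to that value. Most of the samples are spent on the coarsest level, which is why the abstract's point about being free to choose a coarse first mesh translates into cost savings.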

Please note: this talk is hosted by Rutherford Appleton Laboratory, Harwell Campus, Didcot, OX11 0QX.

The Centre for Teaching and Learning invites staff to apply for the new Oxford Teaching, Learning and Educational Leadership Recognition Scheme, following its successful reaccreditation by Advance HE. The new scheme includes a strong emphasis on recognition and educational leadership, and is designed for staff who teach and support learning to achieve fellowships of the Higher Education Academy.

A reminder that prelims corner is taking place every Monday at 11am in the South Mezzanine!
