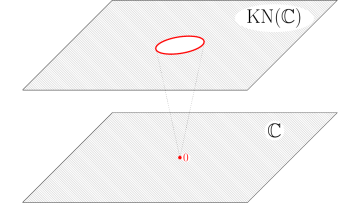

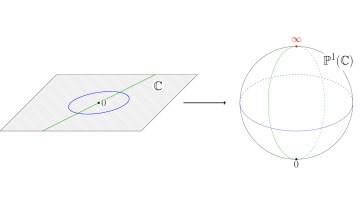

The slope of a link computed via C-complexes

Abstract

Together with Alex Degtyarev and Vincent Florens, we introduced a new invariant of colored links in integral homology spheres, called the slope. In this talk I will define the invariant and highlight some of its most interesting properties, as well as its relationship to the Conway polynomials and to the Kojima–Yamasaki eta-function. The emphasis will be on our latest computational progress: a formula for calculating the slope from a C-complex.