Robust synchronization of a class of chaotic networks

Čelikovský, S

Lynnyk, V

Chen, G

Journal of the Franklin Institute

volume 350

issue 10

2936-2948

(Dec 2013)

Composite centrality: A natural scale for complex evolving networks

Joseph, A

Chen, G

Physica D: Nonlinear Phenomena

volume 267

58-67

(Jan 2014)

Trajectory tracking on complex networks via neural sliding-mode pinning control

Vega, C

Suarez, O

Sanchez, E

Chen, G

2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC)

995-1000

(2017)

https://www.webofscience.com/api/gateway?GWVersion=2&SrcApp=elements_prod_1&SrcAuth=WosAPI&KeyUT=WOS:000427598701005&DestLinkType=FullRecord&DestApp=WOS_CPL

Random vortex dynamics via functional stochastic differential equations

Qian, Z

Süli, E

Zhang, Y

Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences

volume 478

issue 2266

(05 Oct 2022)

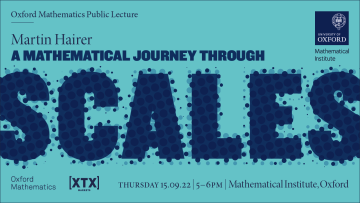

The tiny world of particles and atoms and the gigantic world of the entire universe are separated by about forty orders of magnitude. As we move from one to the other, the laws of nature can behave in drastically different ways, sometimes obeying quantum physics, general relativity, or Newton’s classical mechanics, not to mention other intermediate theories.

Stochastic modelling of African swine fever in wild boar and domestic pigs: epidemic forecasting and comparison of disease management strategies

Dankwa, E

Lambert, S

Hayes, S

Thompson, R

Donnelly, C

Epidemics

volume 40

(13 Aug 2022)

Multifidelity multilevel Monte Carlo to accelerate approximate Bayesian parameter inference for partially observed stochastic processes

Warne, D

Prescott, T

Baker, R

Simpson, M

Journal of Computational Physics

volume 469

(15 Nov 2022)

Neck pinches along the Lagrangian mean curvature flow of surfaces

Lotay, J

Schulze, F

Székelyhidi, G

(23 Aug 2022)

Density of GeV muons in air showers measured with IceTop

Abbasi, R

Ackermann, M

Adams, J

Aguilar, J

Ahlers, M

Ahrens, M

Alameddine, J

Alves, A

Amin, N

Andeen, K

Anderson, T

Anton, G

Argüelles, C

Ashida, Y

Axani, S

Bai, X

V., A

Barwick, S

Bastian, B

Basu, V

Baur, S

Bay, R

Beatty, J

Becker, K

Tjus, J

Beise, J

Bellenghi, C

Benda, S

BenZvi, S

Berley, D

Bernardini, E

Besson, D

Binder, G

Bindig, D

Blaufuss, E

Blot, S

Boddenberg, M

Bontempo, F

Borowka, J

Böser, S

Botner, O

Böttcher, J

Bourbeau, E

Bradascio, F

Braun, J

Brinson, B

Bron, S

Brostean-Kaiser, J

Browne, S

Burgman, A

Burley, R

Busse, R

Campana, M

Carnie-Bronca, E

Chen, C

Chen, Z

Chirkin, D

Choi, K

Clark, B

Clark, K

Classen, L

Coleman, A

Collin, G

Conrad, J

Coppin, P

Correa, P

Cowen, D

Cross, R

Dappen, C

Dave, P

De Clercq, C

DeLaunay, J

López, D

Dembinski, H

Deoskar, K

Desai, A

Desiati, P

de Vries, K

de Wasseige, G

de With, M

DeYoung, T

Diaz, A

Díaz-Vélez, J

Dittmer, M

Dujmovic, H

Dunkman, M

DuVernois, M

Ehrhardt, T

Eller, P

Engel, R

Erpenbeck, H

Evans, J

Evenson, P

Fan, K

Fazely, A

Fedynitch, A

Feigl, N

Fiedlschuster, S

Fienberg, A

Finley, C

Fischer, L

Fox, D

Franckowiak, A

Friedman, E

Fritz, A

Fürst, P

Gaisser, T

Gallagher, J

Ganster, E

Garcia, A

Garrappa, S

Gerhardt, L

Ghadimi, A

Glaser, C

Glauch, T

Glüsenkamp, T

Gonzalez, J

Goswami, S

Grant, D

Grégoire, T

Griswold, S

Günther, C

Gutjahr, P

Haack, C

Hallgren, A

Halliday, R

Halve, L

Halzen, F

Minh, M

Hanson, K

Hardin, J

Harnisch, A

Haungs, A

Hebecker, D

Helbing, K

Henningsen, F

Hettinger, E

Hickford, S

Hignight, J

Hill, C

Hill, G

Hoffman, K

Hoffmann, R

Hoshina, K

Huang, F

Huber, M

Huber, T

Hultqvist, K

Hünnefeld, M

Hussain, R

Hymon, K

In, S

Iovine, N

Ishihara, A

Jansson, M

Japaridze, G

Jeong, M

Jin, M

Jones, B

Kang, D

Kang, W

Kang, X

Kappes, A

Kappesser, D

Kardum, L

Karg, T

Karl, M

Karle, A

Katz, U

Kauer, M

Kellermann, M

Kelley, J

Kheirandish, A

Kin, K

Kintscher, T

Kiryluk, J

Klein, S

Koirala, R

Kolanoski, H

Kontrimas, T

Köpke, L

Kopper, C

Kopper, S

Koskinen, D

Koundal, P

Kovacevich, M

Kowalski, M

Kozynets, T

Kun, E

Kurahashi, N

Lad, N

Gualda, C

Lanfranchi, J

Larson, M

Lauber, F

Lazar, J

Lee, J

Leonard, K

Leszczyńska, A

Li, Y

Lincetto, M

Liu, Q

Liubarska, M

Lohfink, E

Mariscal, C

Lu, L

Lucarelli, F

Ludwig, A

Luszczak, W

Lyu, Y

Y., W

Madsen, J

Mahn, K

Makino, Y

Mancina, S

Mariş, I

Martinez-Soler, I

Maruyama, R

McCarthy, S

McElroy, T

McNally, F

Mead, J

Meagher, K

Mechbal, S

Medina, A

Meier, M

Meighen-Berger, S

Micallef, J

Mockler, D

Montaruli, T

Moore, R

Morse, R

Moulai, M

Naab, R

Nagai, R

Naumann, U

Necker, J

Nguyễn, L

Niederhausen, H

Nisa, M

Nowicki, S

Pollmann, A

Oehler, M

Oeyen, B

Olivas, A

O’Sullivan, E

Pandya, H

Pankova, D

Park, N

Parker, G

Paudel, E

Paul, L

de los Heros, C

Peters, L

Peterson, J

Philippen, S

Pieper, S

Pittermann, M

Pizzuto, A

Plum, M

Popovych, Y

Porcelli, A

Rodriguez, M

Pries, B

Przybylski, G

Raab, C

Rack-Helleis, J

Raissi, A

Rameez, M

Rawlins, K

Rea, I

Rechav, Z

Rehman, A

Reichherzer, P

Reimann, R

Renzi, G

Resconi, E

Reusch, S

Rhode, W

Richman, M

Riedel, B

Roberts, E

Robertson, S

Roellinghoff, G

Rongen, M

Rott, C

Ruhe, T

Ryckbosch, D

Cantu, D

Safa, I

Saffer, J

Herrera, S

Sandrock, A

Santander, M

Sarkar, S

Satalecka, K

Schaufel, M

Schieler, H

Schindler, S

Schmidt, T

Schneider, A

Schneider, J

Schröder, F

Schumacher, L

Schwefer, G

Sclafani, S

Seckel, D

Seunarine, S

Sharma, A

Shefali, S

Shimizu, N

Silva, M

Skrzypek, B

Smithers, B

Snihur, R

Soedingrekso, J

Soldin, D

Spannfellner, C

Spiczak, G

Spiering, C

Stachurska, J

Stamatikos, M

Stanev, T

Stein, R

Stettner, J

Stezelberger, T

Stürwald, T

Stuttard, T

Sullivan, G

Taboada, I

Ter-Antonyan, S

Thwaites, J

Tilav, S

Tischbein, F

Tollefson, K

Tönnis, C

Toscano, S

Tosi, D

Trettin, A

Tselengidou, M

Tung, C

Turcati, A

Turcotte, R

Turley, C

Twagirayezu, J

Ty, B

Elorrieta, M

Valtonen-Mattila, N

Vandenbroucke, J

van Eijndhoven, N

Vannerom, D

van Santen, J

Veitch-Michaelis, J

Verpoest, S

Walck, C

Wang, W

Watson, T

Weaver, C

Weigel, P

Weindl, A

Weiss, M

Weldert, J

Wendt, C

Werthebach, J

Weyrauch, M

Whitehorn, N

Wiebusch, C

Williams, D

Wolf, M

Wrede, G

Wulff, J

Xu, X

Yanez, J

Yildizci, E

Yoshida, S

Yu, S

Yuan, T

Zhang, Z

Zhelnin, P

Physical Review D

volume 106

issue 3

032010

(01 Aug 2022)