Dimensionality Reduction Techniques for Big Data Optimisation

- Researcher: Zhen Shao

- Academic Supervisor: Coralia Cartis

- Industrial Supervisors: Jan Fiala

Background

NAG (Numerical Algorithm Group Ltd.) are interested in the development of scalable algorithms for large optimization problems which arise during nonlinear data fitting. In such problems, the objective is to determine the set of parameters values that minimizes the error between the outputs of a parameter-dependent model and a set of experimental measurements/data points. Modern data fitting problems, such as numerical weather forecasting and machine learning, involve hundreds of millions of measurements/data points whereas the current state of the art optimization methods are limited to problems with only thousands of measurements/data points. As a result, much of the available data is currently unutilized in the data fitting procedure.

The overall aim of my project is to investigate dimensionality reduction techniques for optimization problems and develop general scalable optimization solvers for non-linear data fitting and other big data optimization problems.

Figure 1. Reduce dimensionality by projection.

Progress

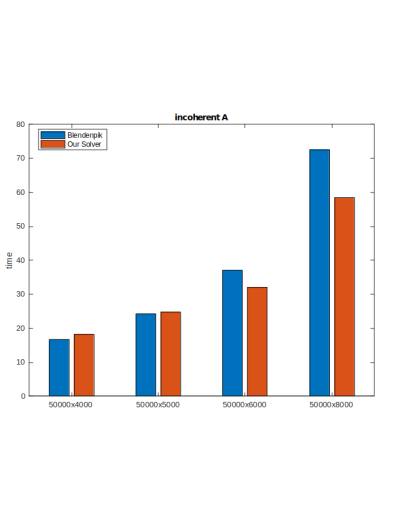

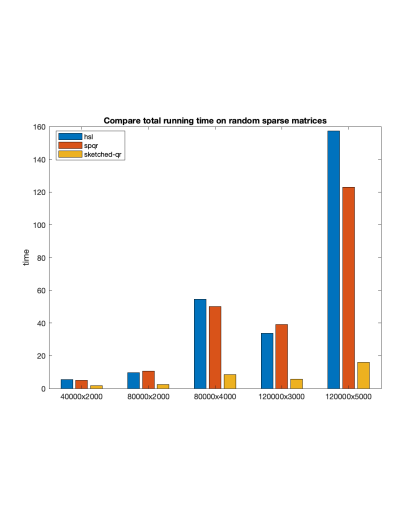

So far, the main focus has been dimensionality reduction for linear least square problems, where the model depends linearly on the parameters. We developed a generic solver for large scale linear least square problems that deal with both dense and sparse matrix inputs. For random dense inputs, our solvers are faster than the state-of-the-art dimensionality reduction solver (Blendenpik), as displayed in Figure 2 (left). For random sparse inputs, our solvers are significantly faster than the state-of-the-art direct solver (SPQR) and the preconditioned iterative solver (HSL) on ill conditioned random sparse matrices, as displayed in Figure 2 (right). Our algorithm also performs better than SPQR and HSL on large, very over-determined matrices from the Florida matrix collection test sets.

Figure 2. Comparison of computation times for random dense inputs (left) and ill-conditioned random sparse matrices (right).

Figure 2. Comparison of computation times for random dense inputs (left) and ill-conditioned random sparse matrices (right).

Future Work

The next step is to extend the dimensionality reduction techniques we have employed for the linear least square case to the more general non-linear least squares, as well as trying to reduce the number of variables. The solvers will have many applications, like speed up training in machine learning problems or financial model calibrations.